# Deep Learning

Using Citizen Science Data as Pre-Training for Semantic Segmentation of High-Resolution UAV Images for Natural Forests Post-Disturbance Assessment

Published in the MDPI Forests journal!

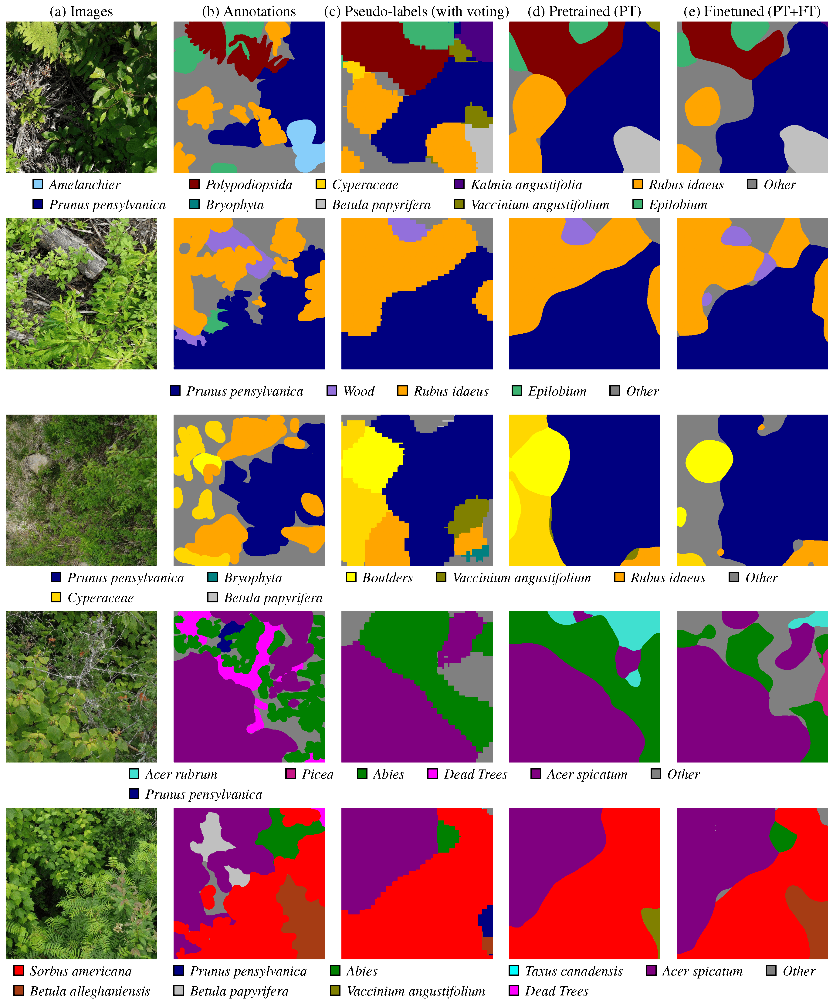

Over the past few months, I contributed to the paper *Using Citizen Science Data as Pre-Training for Semantic Segmentation of High-Resolution UAV Images for Natural Forests Post-Disturbance Assessment*, published in the special issue "Classification of Forest Tree Species Using Remote Sensing Technologies: Latest Advances and Improvements" of the MDPI journal *Forests*. The paper proposes a novel pre-training approach for semantic segmentation of UAV imagery: a classifier trained on citizen science data automatically labels over 140,000 images, which are then used to pre-train the segmentation model. This improves performance, reaching a higher F1 score (43.74%) than training solely on manually labeled data (41.58%). With this paper, we highlight the importance of AI for large-scale environmental monitoring of vast, dense forested areas, such as those in the province of Quebec.
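For readers less familiar with the metric, here is a minimal sketch of how an F1 score can be computed for semantic segmentation. It assumes the score is macro-averaged over classes on flattened per-pixel label masks; the exact averaging scheme used in the paper is not stated in this post, and the function name `macro_f1` is purely illustrative.

```python
def macro_f1(y_true, y_pred, num_classes):
    """Macro-averaged F1 over classes for flattened per-pixel label masks.

    Assumption: each class's F1 is 2*TP / (2*TP + FP + FN), and the final
    score is the unweighted mean over classes present in either mask.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("masks must contain the same number of pixels")

    f1_scores = []
    for c in range(num_classes):
        # Count per-pixel true positives, false positives and false negatives for class c
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # Skip classes absent from both masks, where F1 is undefined
        if denom == 0:
            continue
        f1_scores.append(2 * tp / denom)

    return sum(f1_scores) / len(f1_scores) if f1_scores else 0.0


# Toy example with three hypothetical classes (e.g. background and two tree species)
ground_truth = [0, 0, 1, 1, 2, 2]
prediction = [0, 1, 1, 1, 2, 0]
print(macro_f1(ground_truth, prediction, num_classes=3))
```

Macro-averaging gives every class the same weight regardless of how many pixels it covers, which is why rare classes can pull the overall score down considerably.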

Artificial Intelligence Resources

Learning resources for anyone interested in the vast domain of AI

Here is a slightly curated list of learning resources and useful links in Computer Vision, Artificial Intelligence and related topics. For resources on robotics, visit the robotics resources post. If you have other great resources to suggest, feel free to contact me.

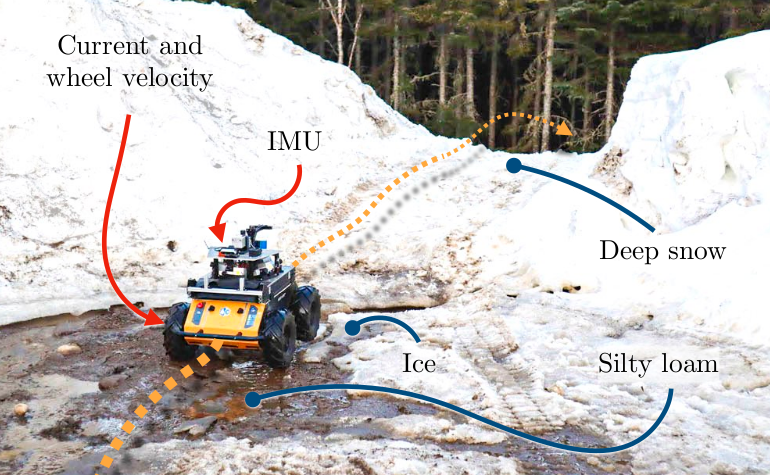

Proprioception Is All You Need: Terrain Classification for Boreal Forests

My paper, *Proprioception Is All You Need: Terrain Classification for Boreal Forests*, will be presented at the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2024) in Abu Dhabi, UAE. The paper presents BorealTC, a publicly available dataset containing annotated data from a wheeled UGV (unmanned ground vehicle) driving over various mobility-impeding terrain types typical of the boreal forest. The data was acquired in winter and spring on deep snow and silty loam, two terrains that are uncommon in urban settings.